Monkey Bubble deploys Video Transport for remote commentary in esports

Monkey Bubble is a leading esports video production company based in Breda, the Netherlands, primarily focused on Blizzard titles such as Overwatch. We had a great conversation with Sven van Vossen, who said his unofficial title would be “make stuff work”.

We have a physical studio in Breda. That studio is set up in a way that no one actually has to be there. Everyone is remote.

What is Monkey Bubble?

Monkey Bubble started as a hobby project that got quite out of hand. We started with esports, and that's still the one thing we do. Just before COVID, we started working on a proper remote solution, so we already had everything in place when the pandemic hit. The pandemic simply stress-tested the approaches we were already using.

Our core team is six people. Three of us are Dutch, and we have one person each from the UK, Australia, and the United States. Most of our work runs on US West Coast time, so I tend to finish work around the morning rush hour. But as a team, we are available around the clock.

Who are your customers?

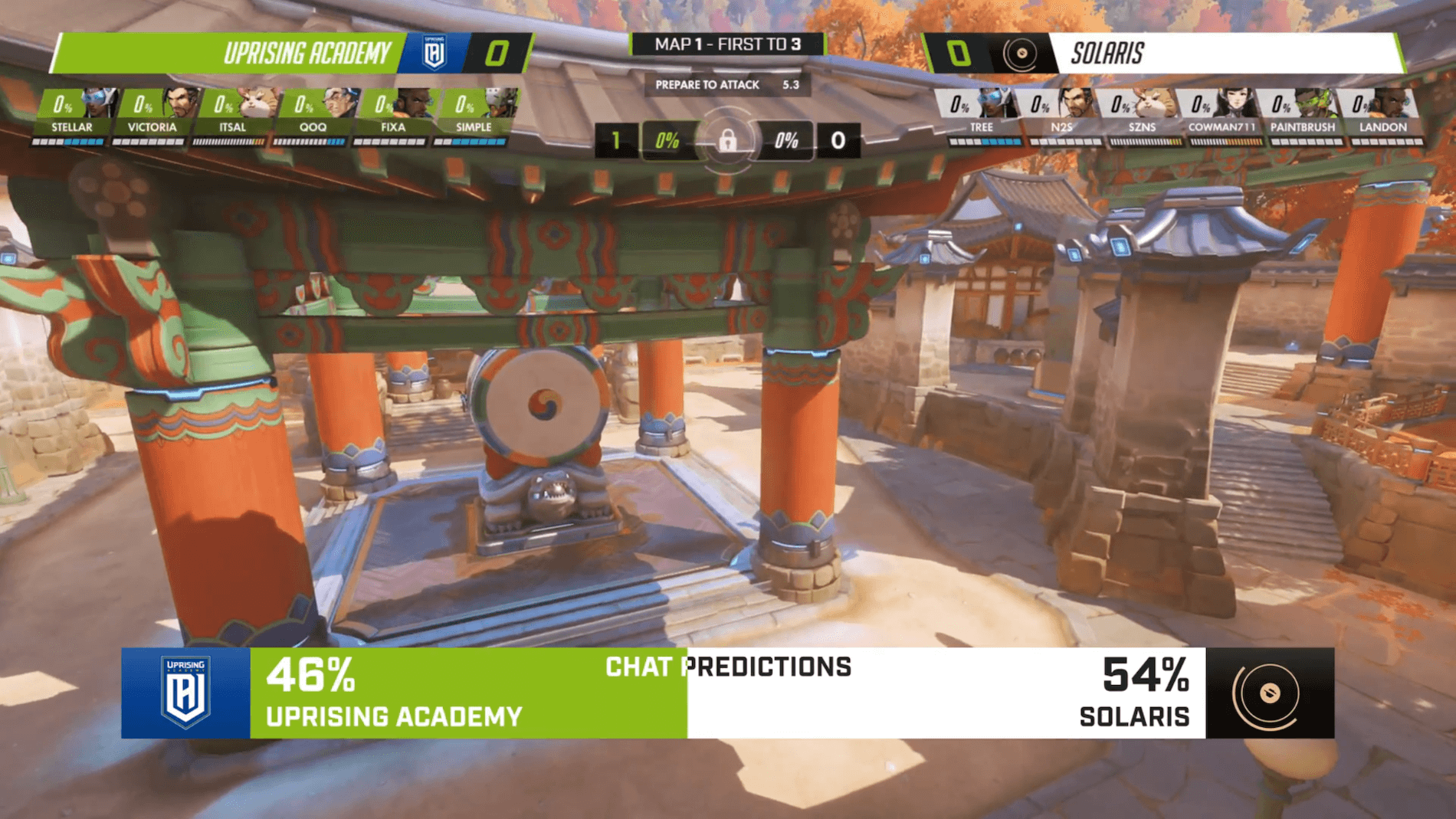

We work with game developers directly. Overwatch Contenders is our main game title. Lately we have been able to work with collegiate esports, which is growing rapidly, especially in the United States. Besides that, we do charitable events and fundraisers, events to promote inclusivity for marginalised groups, and much more.

What do your customers value in your services?

I think it's, first of all, quality, but everyone offers that. A lot of the value is actually in project management. We have more expertise in how to set up these events and how to handle many of the back-end tasks that a typical production company is not equipped for. Our strength is in thinking about the full scope of a project and its brand. As a result, we help make sure the entire event runs smoothly, rather than just taking care of the broadcast part.

What are the specifics of esports production?

In esports, most events are completely remote. Players are at home, talent is at home, and the production is often in another country. You cannot just walk up to someone.

Another thing is timing. In esports, multiple matches happen within a single day, which leaves you fairly little time to manage the workflow. You have to manage participants and make sure they are ready on time, even when the schedule is running half an hour early or half an hour late. That's a big difference from, say, a soccer match, which lasts 90 minutes and might be extended by three or four minutes. In some rare circumstances you get a longer extension, but in essence you know in advance how long the match is going to be.

So in esports, the time you spend busy and focused on a production can take the whole day. I just came back from a production that ran two days in a row. On Tuesday, I will leave my place at 2 pm and return home at 8 am the next morning. That's often because we do the EU and NA regions back to back. So, very long working days.

What was the job that led you to Video Transport?

In an esports match, you tend to have multiple observers. Observers provide the viewpoints inside the game: some do first-person views and some do free cam. They are run by separate people who specialize in capturing the look and feel of a match. It is as if each player had a GoPro camera attached to them, plus several camera operators in the field. During production you switch between those views, which lets you cut to the action where needed and follow multiple teams at the same time.

We were looking for a solution that would let us easily get the observers' game capture feeds and also keep them synchronized. With multiple observers in a single match, getting those feeds in sync was quite a challenge. In a very fast game, people will notice if you switch a second into the future or a second into the past. We needed something where we could set the delay precisely and also adjust it on the fly. That is what we originally used Video Transport for.
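To illustrate the synchronization problem described above, here is a minimal Python sketch of the general idea, not Video Transport's actual implementation. It assumes each observer feed's end-to-end latency is already known; the function `alignment_delays`, the class `DelayLine`, and the feed names are hypothetical. Each feed is held back by the difference between its latency and the slowest feed's latency, so all feeds line up, and the hold-back value can be changed while frames are queued, without restarting the feed.

```python
from __future__ import annotations

from collections import deque


def alignment_delays(latencies_ms: dict[str, float], margin_ms: float = 0.0) -> dict[str, float]:
    """Extra delay (ms) per feed so every feed reaches the same total latency.

    latencies_ms: measured end-to-end latency of each feed (hypothetical input).
    margin_ms: optional headroom added on top of the slowest feed.
    """
    if not latencies_ms:
        return {}
    target = max(latencies_ms.values()) + margin_ms
    return {name: target - lat for name, lat in latencies_ms.items()}


class DelayLine:
    """FIFO of timestamped frames, each released once `delay_ms` has elapsed.

    Changing the delay affects frames already in the queue, so the
    alignment can be tuned live instead of stopping and restarting.
    """

    def __init__(self, delay_ms: float) -> None:
        self.delay_ms = delay_ms
        self._queue: deque[tuple[float, object]] = deque()

    def set_delay(self, delay_ms: float) -> None:
        # Takes effect on the next pop_ready() call.
        self.delay_ms = delay_ms

    def push(self, arrival_ms: float, frame: object) -> None:
        # Frames are assumed to arrive in timestamp order.
        self._queue.append((arrival_ms, frame))

    def pop_ready(self, now_ms: float) -> list[object]:
        # Release every frame whose arrival time plus delay has passed.
        ready = []
        while self._queue and self._queue[0][0] + self.delay_ms <= now_ms:
            ready.append(self._queue.popleft()[1])
        return ready


delays = alignment_delays({"pov_1": 120.0, "pov_2": 95.0, "freecam": 140.0})
print(delays)  # {'pov_1': 20.0, 'pov_2': 45.0, 'freecam': 0.0}
```

In this sketch the slowest feed sets the pace: it gets no extra delay, while faster feeds are held back until they match it.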

At some point, we started running the games in the cloud. That allowed us to avoid sending feeds across the world altogether, and we started using VT for feeds to talent – that is, sending feeds to commentators in high quality but still with low latency.

We also use it for return feeds to producers at home. For instance, our technical director (TD) is in Australia. He uses it to pull in a number of return feeds and multiviews for his studio setup at home.

What were the drivers that made you prefer our product to other solutions?

It's the combination of quality and low latency that really does it. Most games run at 60 FPS. Our main title is Overwatch, which is very fast-paced and also very particle-heavy. In games like that, the difference is very noticeable, and it's a much better experience to commentate on a fluid game.

In your solution, even in the web feed, you can push the bitrate to levels that would be ridiculous for a webcam. And most commentators in esports have good internet. The feed we send to commentators is around 15 Mbps HEVC, which is about the level of quality where, for example, player names become readable.

How often is Video Transport used by your team?

I'd say we use it in every production, and we have productions about three times a week.

What have you achieved with our product?

We were the only team in Overwatch using multiple synchronized observers in a remote environment. We were basically the first to do that, and I guess that's a large achievement in that regard.

Today, the wins are mainly speed and ease of use. In the end, everything VT does, you could do yourself. Sending SRT feeds across or making web feeds: you can do all of that, but it's the time I don't have to spend setting all that up. That's the value. Getting people on board is quick, and getting new feeds sent out is quick. You need those two Dante audio channels? Sure, let's add them. VT makes us more productive and efficient because it reduces setup time.

What do you like most about the product?

I like the fact that it just works with NDI and SDI. Being able to take a source of whatever type and send it out is very useful.

At the start, one of the big things we used it for was the ability to set the latency dynamically. You don't have to keep stopping and restarting the feed to set the exact delay you want, because the change applies while the feed is running.

But I think the biggest value I see is that it works so well with all sorts of different protocols. Adding Dante audio channels here and there, or taking feed X with audio Y without having to build that entire feed somewhere else and then send it out… I think that's a big one.

How would you describe the product to a friend or a colleague?

“How do I get NDI to X?” is a question I've come across quite a few times. And that's what Medialooks does: literally getting stuff from A to B properly, while staying within an NDI workflow.